Building a Customer Radar with OpenClaw

Instead of Shouting Into the Void

I've been working on a few different products at the same time lately: BlackOps, VoiceCommit, and VitalWall. That's partly because I enjoy building, and partly because I haven't yet been forced to pick just one. Each of them solves a problem I personally care about, and each of them feels reasonable in isolation.

What I didn't have was a good answer to a harder question: which one of these actually matters to anyone else.

From the outside, it probably looked like progress. I was shipping features, writing about what I was doing, and posting updates as I went. Internally, though, I knew I was missing something. I had opinions about which product should win, and I had momentum in the sense that code was moving forward, but I didn't yet have any real evidence that the market cared.

That's a subtle problem, because activity feels like traction when you're the one producing it.

At some point I realized I was treating "build in public" as if it were the same thing as listening. It isn't. Writing about what you're doing can look like engagement, but most of the time it's still just broadcasting. If I actually wanted to know which of these ideas had any pull outside my own head, I needed something that paid attention to what people were already saying instead of amplifying what I wanted to say.

So instead of trying to get better at marketing, I did the thing I'm more comfortable with: I built a system.

The goal wasn't to automate posting or to manufacture interest. It was to give myself a way to notice patterns without relying on my own mood or memory. I wanted something that could sit quietly in the background and tell me when the same kinds of problems kept showing up in real conversations.

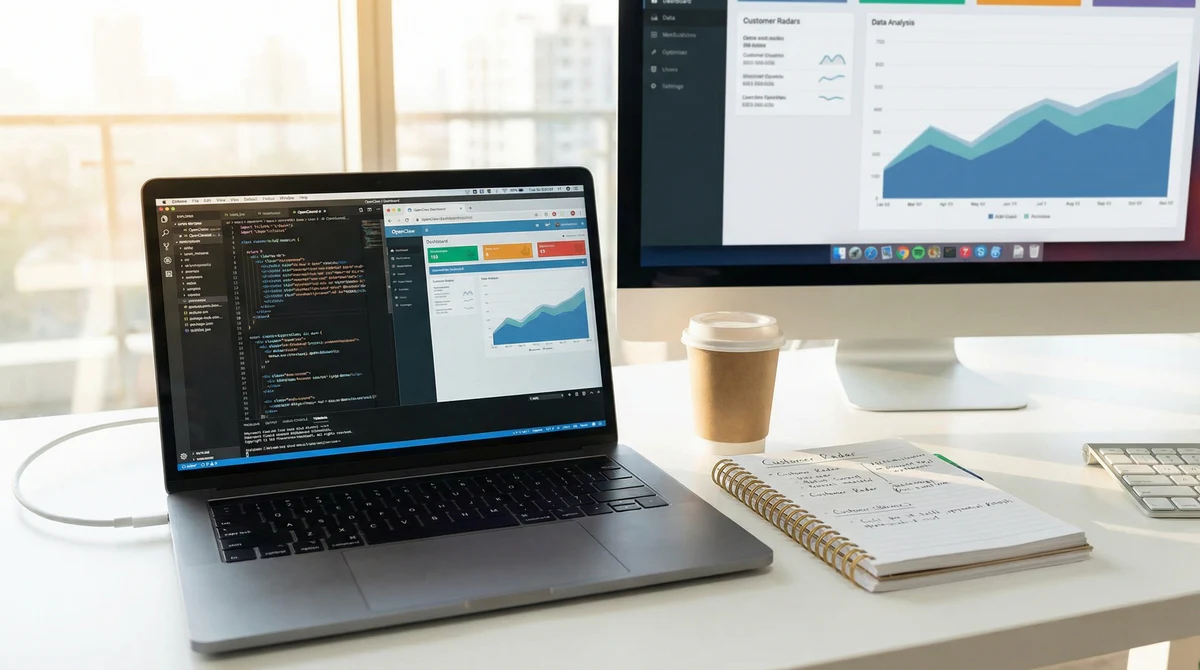

That's what I mean by a customer radar. And OpenClaw turned out to be a practical way to build one.

Why OpenClaw Made Sense for This

What interested me about OpenClaw wasn't the idea of an agent with a personality. I wasn't looking for something that could talk like me or pretend to think like me. What I needed was something closer to infrastructure: a way to run small, focused processes continuously without turning them into a product demo.

The useful part of OpenClaw, for me, was that it could watch things over time. It could look at conversations, summarize what was happening, and send that information somewhere I would actually see it. That meant I could stop relying on random scrolling or memory and instead treat discovery as something that ran whether I was paying attention or not.

I wasn't trying to build an autonomous marketer. I was trying to build sensors.

The Question the System Is Meant to Answer

Everything in this setup exists to answer one question: which of these products, if any, is the world pulling on right now?

Not which one I like the most. Not which one feels the most impressive technically. Which one shows up in real language when people talk about problems they're having.

That framing matters, because it keeps the system grounded in how people actually describe their situation instead of how I describe my ideas.

Listening for Pain Instead of Guessing

The first part of the system looks outward. It scans places like X and Reddit for people talking about things that overlap with the problems I'm working on. That might be frustration with writing consistently, trouble keeping track of ideas, or confusion about what's really happening on their site.

When it finds something relevant, it doesn't respond automatically and it doesn't try to sell anything. It sends the original post into Slack along with a short explanation of why it might matter and a few possible ways I could reply.

The point is not speed or scale. It's replacing random discovery with deliberate observation. Instead of hoping I notice the right conversation at the right moment, I get shown the moments where someone is already expressing a problem in their own words.
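To make that concrete, here is a minimal Python sketch of the filtering step. It is an illustration under assumptions, not the actual setup and not OpenClaw's API: the `Post` type, the topic names, and the phrase lists are all hypothetical, and fetching posts from X or Reddit is left out entirely. The returned dictionaries use a single `text` field, which is the shape a Slack incoming webhook accepts; actually sending them is also left out.

```python
from dataclasses import dataclass

# Hypothetical pain phrases, grouped by problem area. A real list would be
# built from reading actual conversations, not guessed up front.
PAIN_PHRASES = {
    "writing-consistency": ["write consistently", "writing consistently"],
    "idea-tracking": ["keep track of ideas", "lose track of ideas"],
    "site-visibility": ["happening on my site", "who visits my site"],
}


@dataclass
class Post:
    source: str  # e.g. "x" or "reddit"
    url: str
    text: str


def match_topics(post: Post) -> list[str]:
    """Return the problem areas whose phrases appear in the post text."""
    text = post.text.lower()
    return [
        topic
        for topic, phrases in PAIN_PHRASES.items()
        if any(phrase in text for phrase in phrases)
    ]


def to_slack_message(post: Post, topics: list[str]) -> dict:
    """Build a message for human review. Nothing is posted or replied to."""
    return {
        "text": (
            f"Possible signal on {post.source}: {post.url}\n"
            f"> {post.text}\n"
            f"Why it might matter: mentions {', '.join(topics)}\n"
            "Reply ideas: ask what they've tried; share what worked for me; "
            "just bookmark it."
        )
    }


def review_queue(posts: list[Post]) -> list[dict]:
    """Keep only posts that match a problem area, formatted for Slack."""
    queue = []
    for post in posts:
        topics = match_topics(post)
        if topics:
            queue.append(to_slack_message(post, topics))
    return queue
```

Plain substring matching is deliberately crude. It will miss paraphrases and catch some noise, which is acceptable here because a person reads every message before anything happens.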

Turning Work into Something You Can Talk About

The second part of the system looks inward instead of outward. It watches what I'm actually doing: features I ship, bugs I hit, things that annoy me, small workflow changes that make a difference.

That information becomes the raw material for writing. Instead of asking myself what I should post about, I can look at what I already worked on and explain why it exists.

That keeps the narrative tied to reality rather than to whatever sounds good that day. It also removes some of the pressure to perform. I'm not trying to invent a story. I'm just describing what happened and why it mattered to me.

Making the Blog Point Somewhere

I write long-form posts about AI, leverage, and the way I structure my work. On their own, those posts are just arguments. They don't create a relationship unless there's a next step.

So another part of the system reads my blog posts and asks what problem they're really about and whether one of my products fits naturally as a follow-on.

It doesn't add popups or tricks. It just forces the question of alignment: if someone agrees with what I wrote, what would make sense for them to try next?

That question is uncomfortable in a useful way. It makes it obvious when something I've written is interesting but disconnected from anything I'm actually offering.

The Part That Keeps Me Honest

The last part of the system is the one I trust the most, because it's allowed to be boring.

It aggregates what the other parts see and produces a daily summary of whether any of the products are showing up at all and in what way.

Some days, that summary is uninteresting. It might say that none of the products have strong signal yet. That isn't failure. It's calibration. It keeps me from projecting momentum onto activity just because I've been busy.

If there's no pull, I want to know that. It's better than assuming there is one and acting accordingly.
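The aggregation itself can be very small. This is a sketch in Python under assumptions: each signal is a dictionary with a `product` key, and `MIN_MENTIONS` is an arbitrary threshold I made up for illustration, not a measured one.

```python
from collections import Counter

PRODUCTS = ["BlackOps", "VoiceCommit", "VitalWall"]
MIN_MENTIONS = 3  # assumed cutoff for calling something a signal


def daily_summary(signals: list[dict]) -> str:
    """Count the day's signals per product and report each one,
    including products that had no mentions at all."""
    counts = Counter(s["product"] for s in signals)
    lines = []
    for product in PRODUCTS:
        n = counts[product]  # Counter returns 0 for missing keys
        status = "signal" if n >= MIN_MENTIONS else "no strong signal yet"
        lines.append(f"{product}: {n} mention(s), {status}")
    return "\n".join(lines)
```

Every product gets a line every day, even at zero. Reporting the absence explicitly is what keeps the summary from only ever showing good news.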

What This System Does Not Do

This system does not post for me. It does not DM people. It does not try to create excitement. It does not pretend traction exists when it doesn't.

Sometimes what it produces is simply the observation that people aren't talking about these problems yet.

That's still useful, because it tells me to keep listening, change what I'm building, or rethink how I'm describing it, instead of just increasing volume.

It gives me evidence instead of reassurance.

What Changed for Me

The biggest change has been psychological. I stopped trying to convince people that I had something worth paying attention to and started waiting for people to show me that they needed something.

I stopped guessing and started measuring. I stopped treating marketing as expression and started treating discovery as instrumentation.

That shift feels closer to how I think about software in general. The systems I trust most are the ones that observe and adapt quietly instead of demanding attention.

Wrapping Up

OpenClaw happened to be the tool that made this setup possible for me, but the deeper idea doesn’t depend on any specific platform. What matters is treating customer discovery like an engineering problem instead of a performance problem.

As developers, one of the things we’re good at, maybe the thing we’re trained to do, is build feedback loops. We use them in code all the time. Applying that same mindset to figuring out whether anyone actually wants what we build has made a noticeable difference for me. Instead of trying to be louder or more interesting, this approach lets me see whether the same kinds of problems keep showing up, and where.

That’s a slower way to work, and it doesn’t feel as exciting as a big spike of engagement, but it feels more honest. Building a customer radar hasn’t told me what to build. It’s told me what to pay attention to.

If you’re curious about how this plays out in practice — the signals it finds, the ones it doesn’t, and what changes because of it — then subscribe to the newsletter. I’ll share updates as this system actually earns them.

I wrote this post inside BlackOps, my content operating system for thinking, drafting, and refining ideas — with AI assistance.
